“My theory of change for working in AI healthtech” by Andrew_Critch

Content provided by LessWrong. All podcast content including episodes, graphics, and podcast descriptions are uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process outlined here https://ro.player.fm/legal.

This post starts out pretty gloomy but ends up with some points that I feel pretty positive about. Day to day, I'm more focussed on the positive points, but awareness of the negative has been crucial to forming my priorities, so I'm going to start with those. It's mostly addressed to the EA community, but is hopefully somewhat of interest to LessWrong and the Alignment Forum as well.

My main concerns

I think AGI is going to be developed soon, and quickly. Possibly (20%) that's next year, and most likely (80%) before the end of 2029. These are not things you need to believe for yourself in order to understand my view, so no worries if you're not personally convinced of this.

(For what it's worth, I did arrive at this view through years of study and research in AI, combined with over a decade of private forecasting practice [...]

---

Outline:

(00:28) My main concerns

(03:41) Extinction by industrial dehumanization

(06:00) Successionism as a driver of industrial dehumanization

(11:08) My theory of change: confronting successionism with human-specific industries

(15:53) How I identified healthcare as the industry most relevant to caring for humans

(20:00) But why not just do safety work with big AI labs or governments?

(23:22) Conclusion

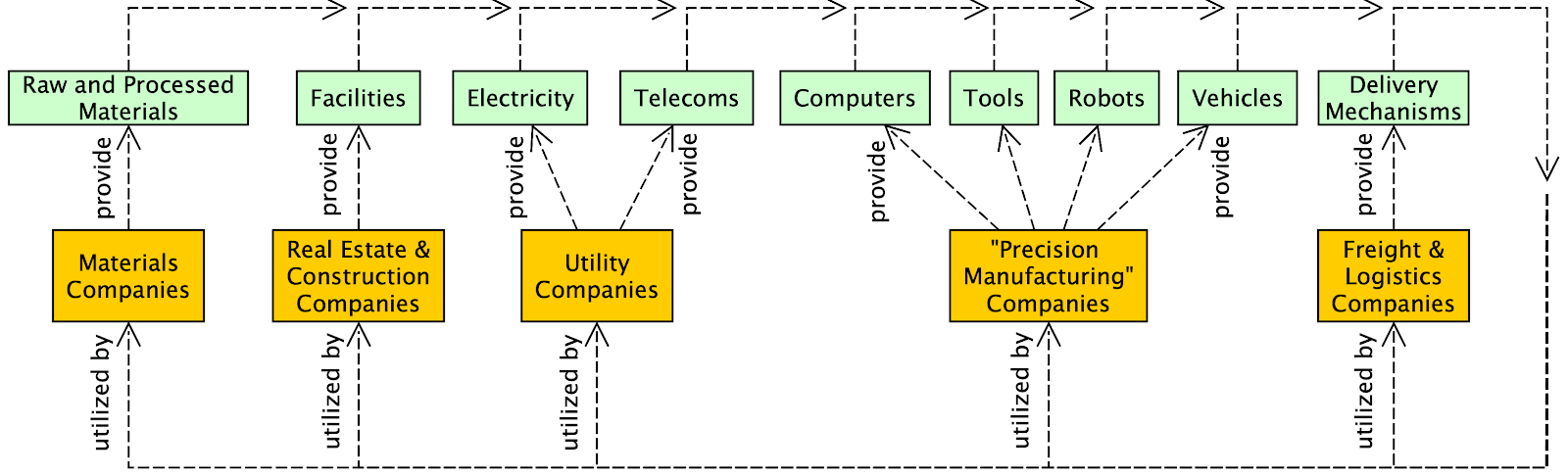

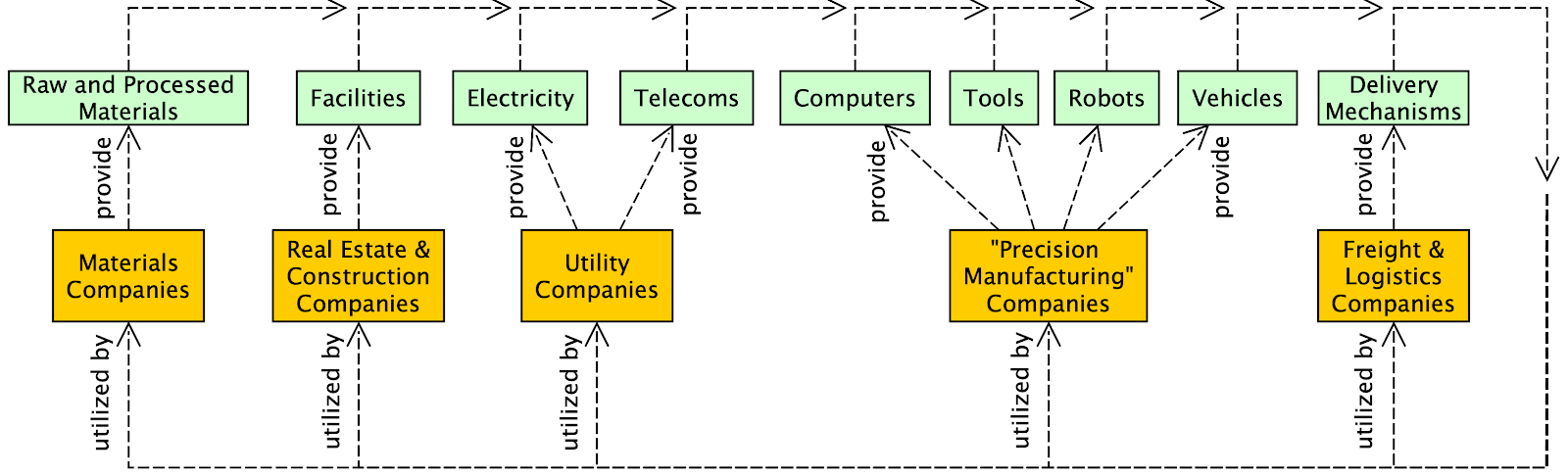

The original text contained 1 image which was described by AI.

---

First published:

October 12th, 2024

Source:

https://www.lesswrong.com/posts/Kobbt3nQgv3yn29pr/my-theory-of-change-for-working-in-ai-healthtech

---

Narrated by TYPE III AUDIO.

---
