“Summary of Situational Awareness - The Decade Ahead” by OscarD🔸

Original by Leopold Aschenbrenner; this summary is neither commissioned nor endorsed by him.

Short Summary

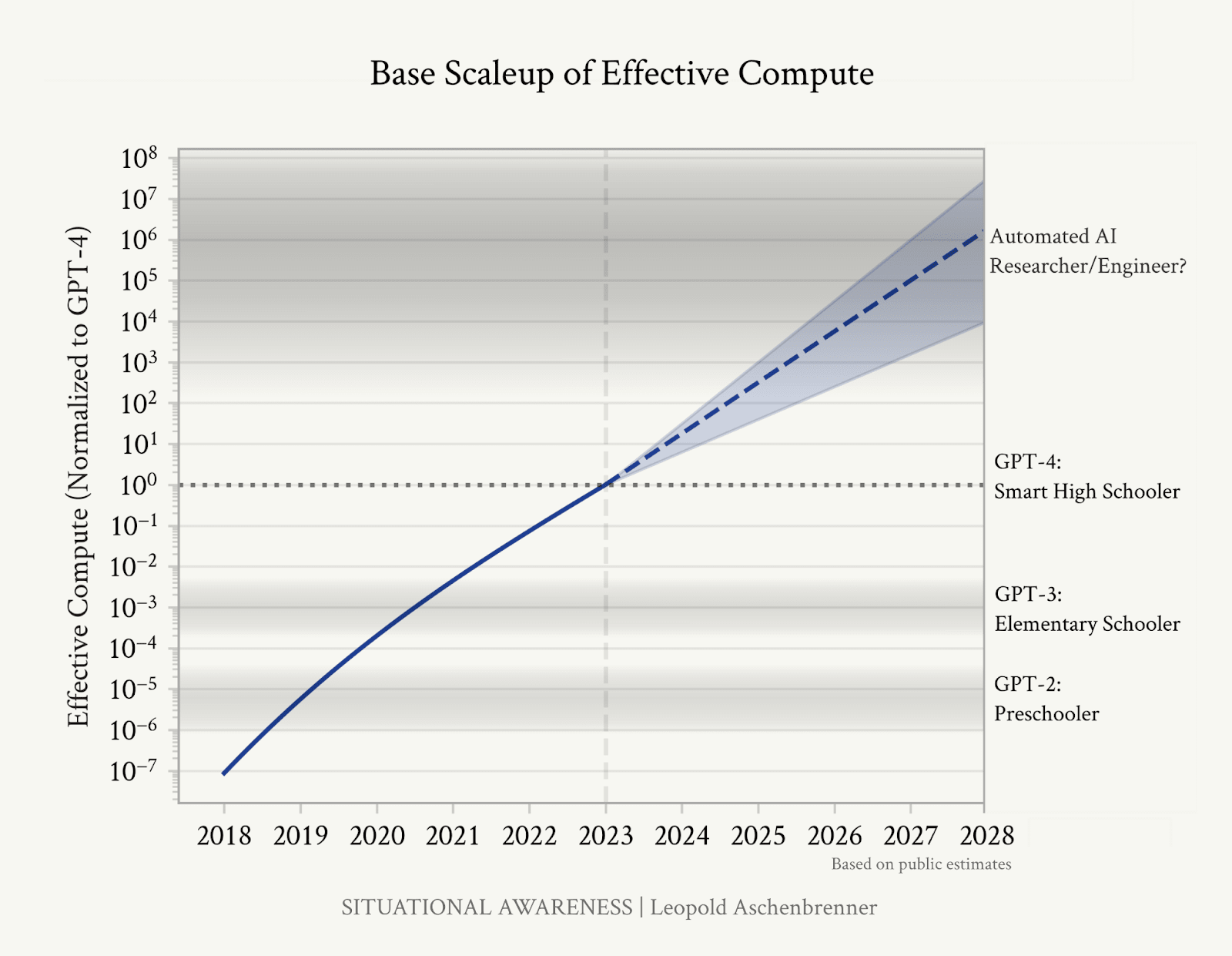

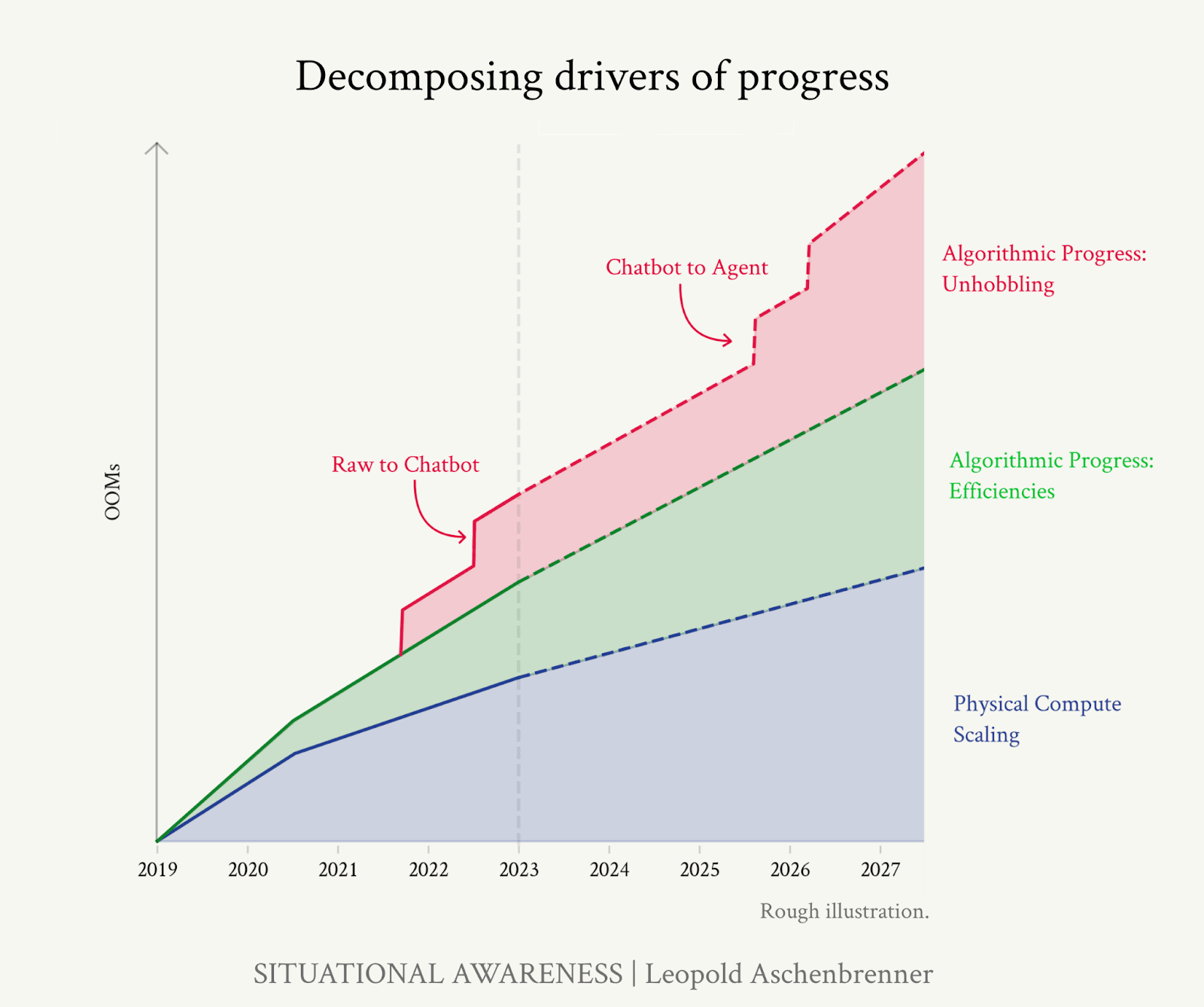

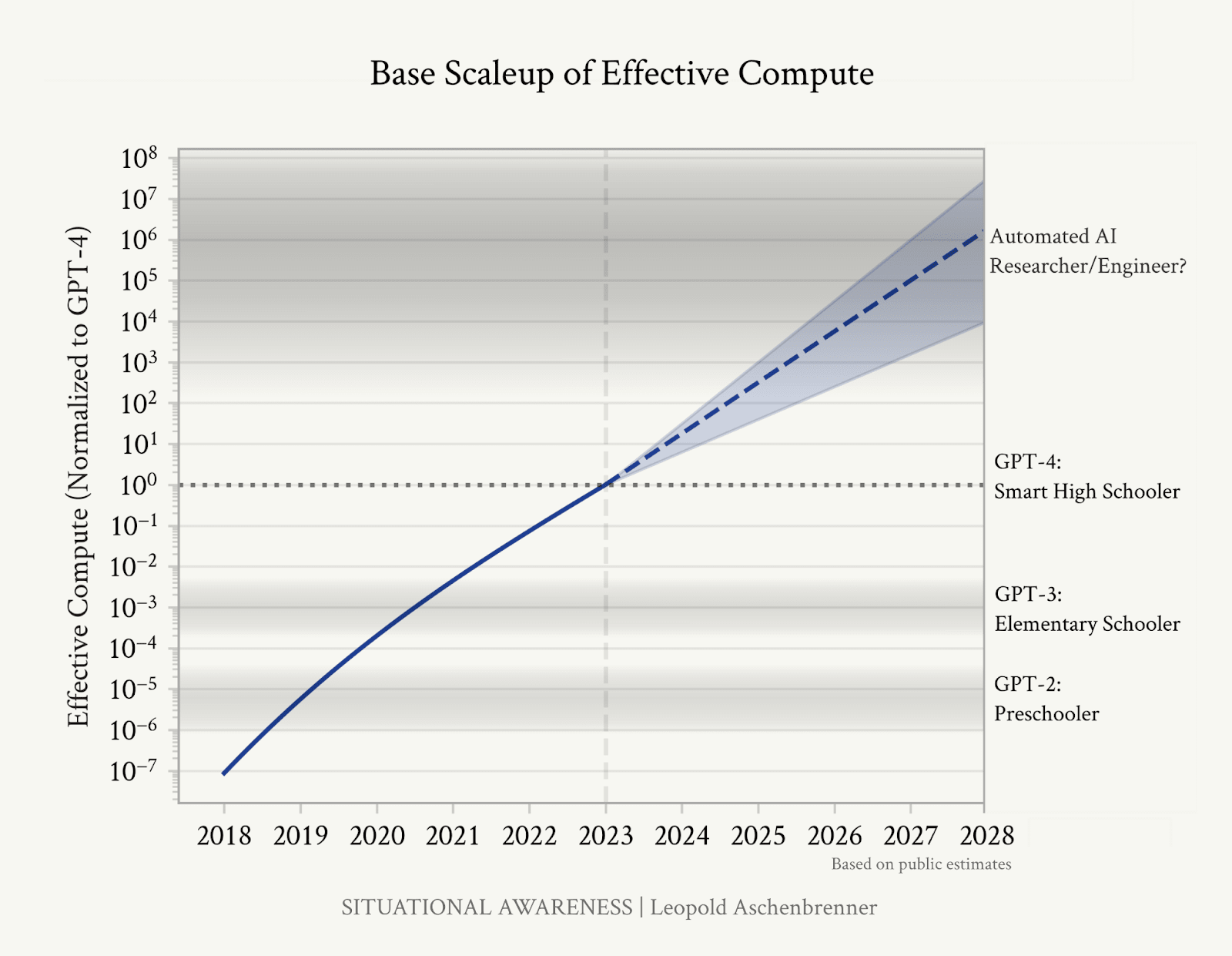

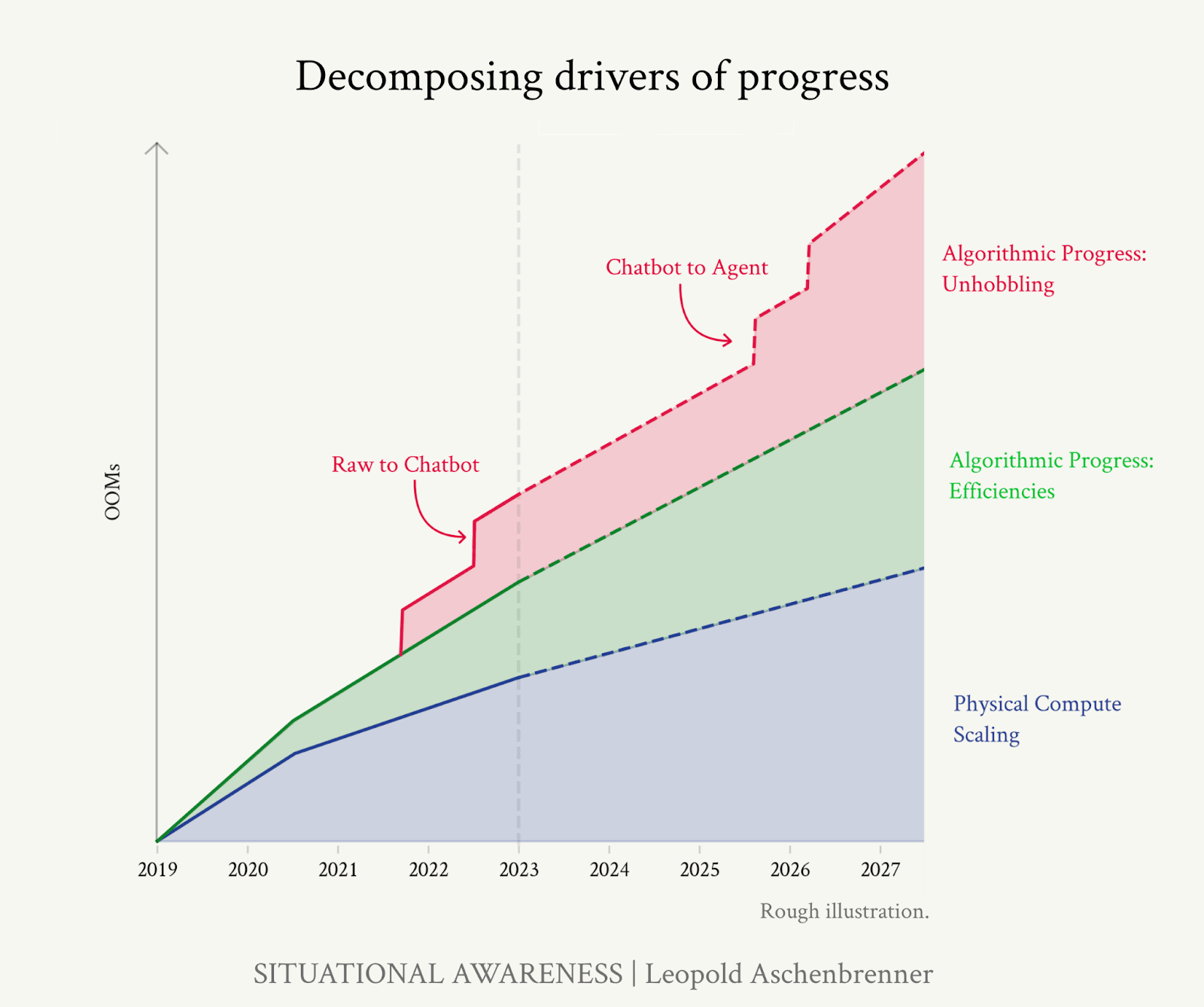

- Extrapolating existing trends in compute, spending, algorithmic progress, and energy needs implies AGI (AI able to fully automate remote jobs) by ~2027.

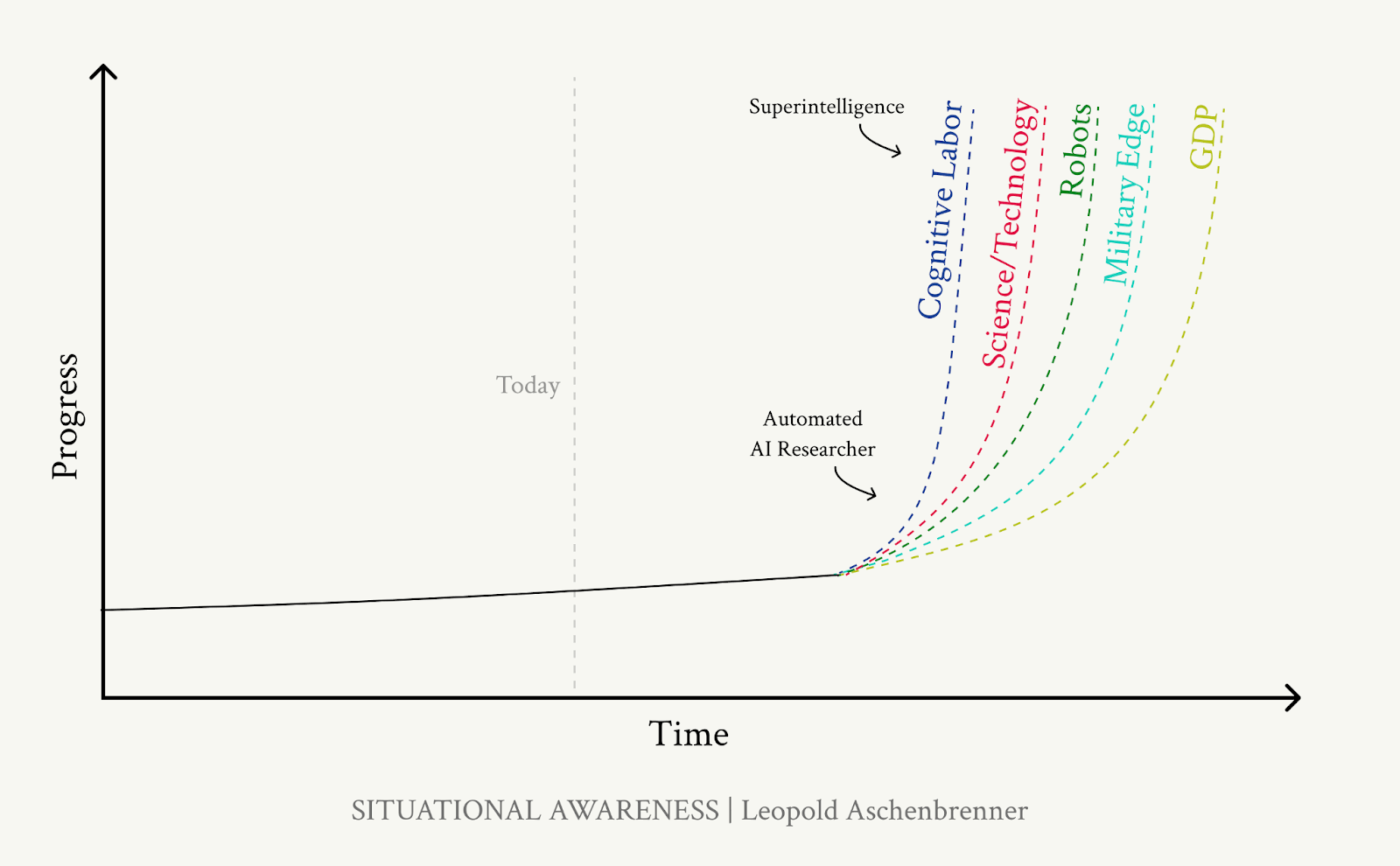

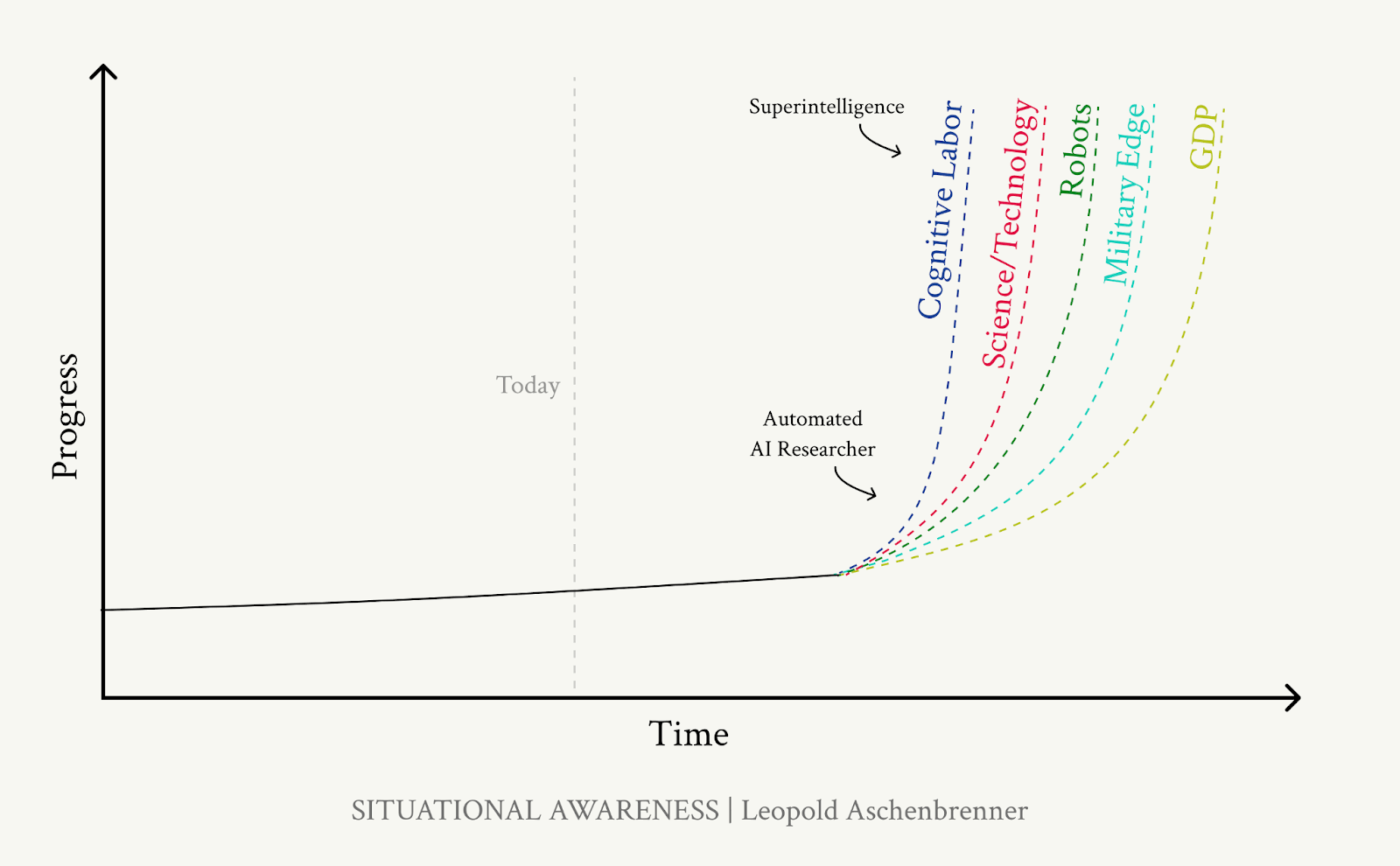

- AGI will greatly accelerate AI research itself, leading to vastly superhuman intelligences being created ~1 year after AGI.

- Superintelligence will confer a decisive strategic advantage militarily by massively accelerating all spheres of science and technology.

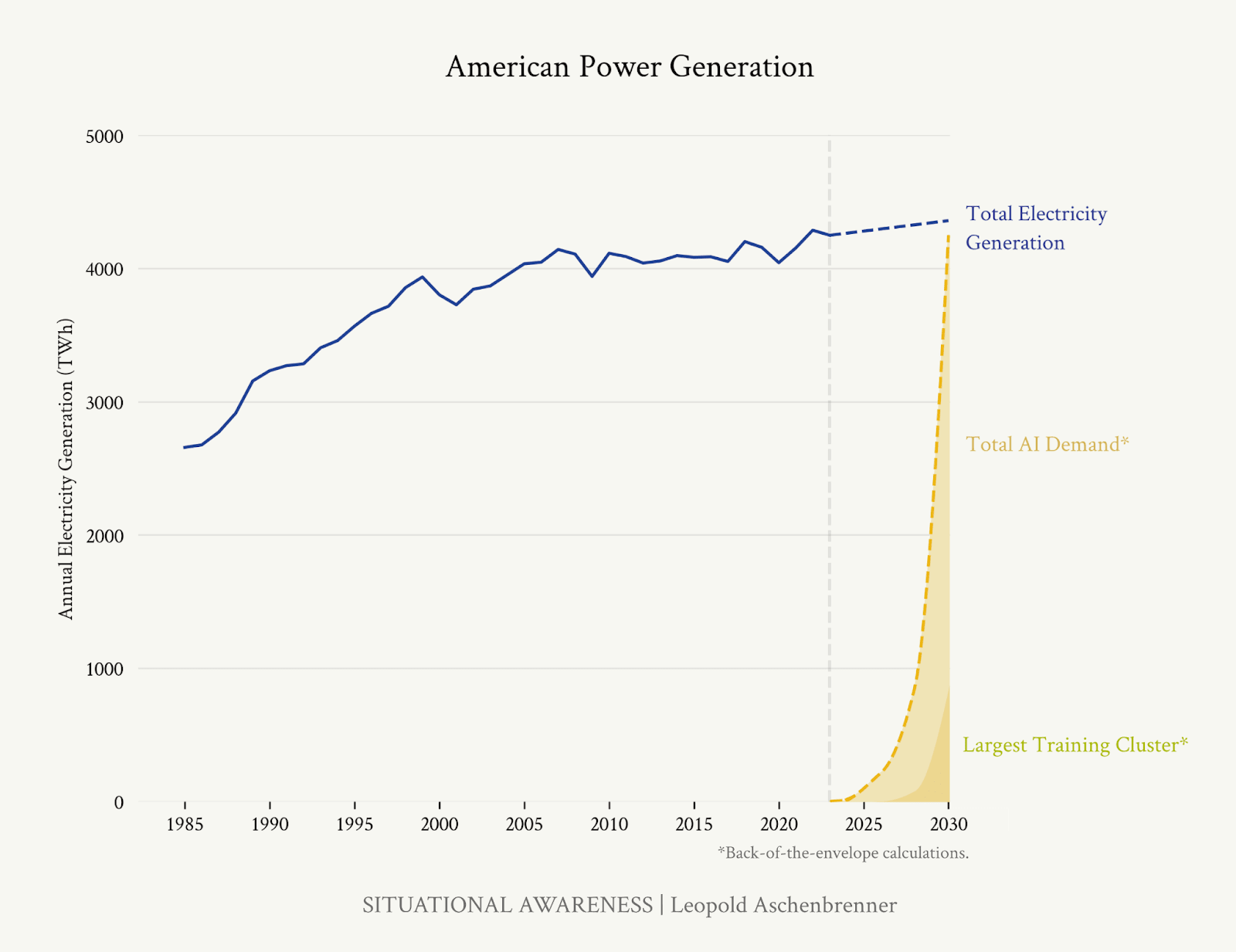

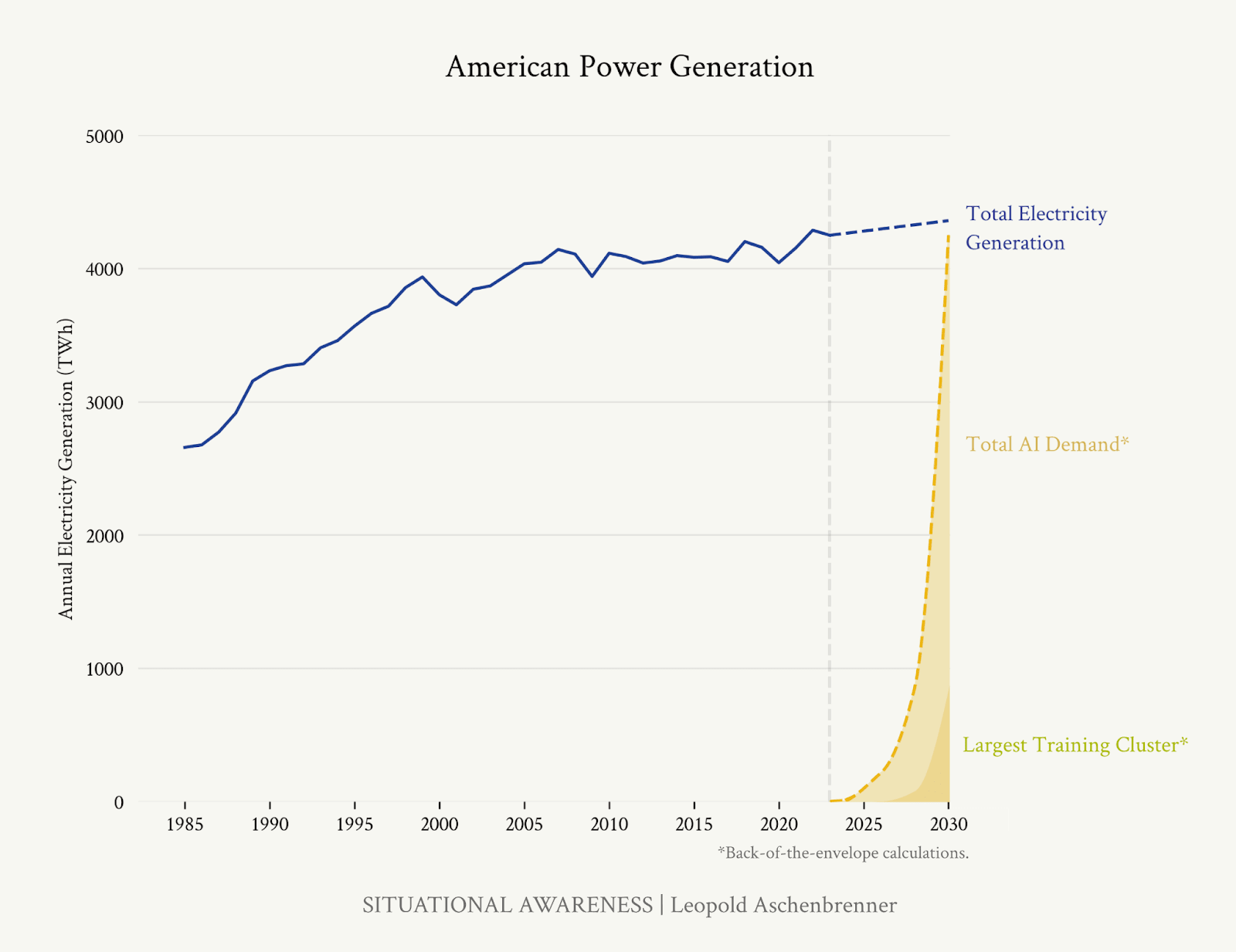

- Electricity will be a bigger bottleneck on scaling datacentres than investment, but the required power can still be generated domestically in the US using natural gas.

- AI safety efforts in the US will be mostly irrelevant if other actors steal the model weights of an AGI. US AGI research must employ vastly better cybersecurity to protect both model weights and algorithmic secrets.

- Aligning superhuman AI systems is a difficult technical challenge, but probably doable, and we must devote lots of [...]

---

Outline:

(00:13) Short Summary

(02:16) 1. From GPT-4 to AGI: Counting the OOMs

(02:24) Past AI progress

(05:38) Training data limitations

(06:42) Trend extrapolations

(07:58) The modal year of AGI is soon

(09:30) 2. From AGI to Superintelligence: the Intelligence Explosion

(09:37) The basic intelligence explosion case

(10:47) Objections and responses

(14:07) The power of superintelligence

(16:29) III. The Challenges

(16:32) IIIa. Racing to the Trillion-Dollar Cluster

(21:12) IIIb. Lock Down the Labs: Security for AGI

(21:20) The power of espionage

(22:24) Securing model weights

(24:01) Protecting algorithmic insights

(24:56) Necessary steps for improved security

(26:50) IIIc. Superalignment

(29:41) IIId. The Free World Must Prevail

(32:41) 4. The Project

(35:12) 5. Parting Thoughts

(36:17) Responses to Situational Awareness

The original text contained one footnote, which was omitted from this narration.

---

First published:

June 8th, 2024

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.
